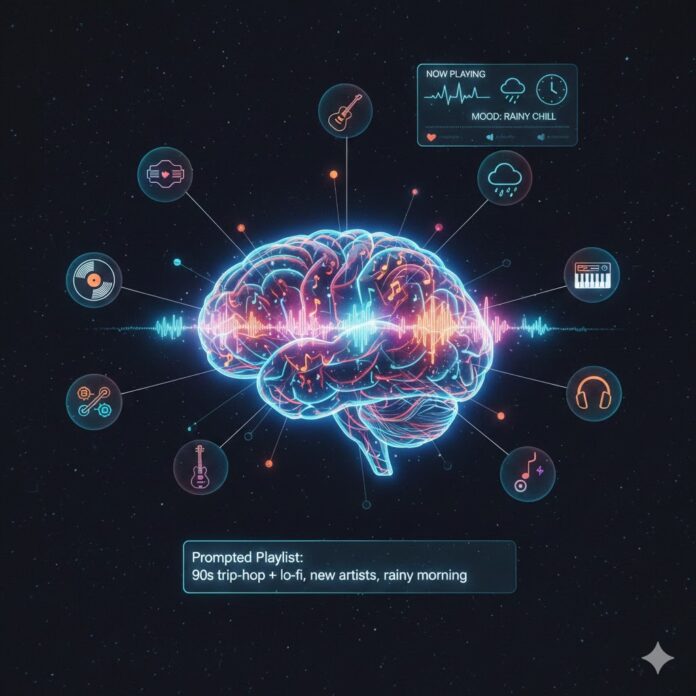

We’re officially in the era of AI music curation, and it’s changing the “vibe” of our daily lives in ways that feel a lot less like a math equation and a lot more like a digital best friend.

Here is what’s actually happening behind the scenes of your headphones.

1. You Can Now “Talk” to Your Playlists

Remember when you had to spend hours digging through sub-genres to find the perfect “Dark Academia” or “Synthwave” tracks? Those days are gone.

Now, we have Prompted Playlists. You can literally tell your app:

“I’m driving through the desert at sunset and I want to feel like the main character in a 70s heist movie—but make it modern.”

Instead of just scanning for keywords, the AI tries to capture the feeling of a heist movie. It uses Large Language Models (LLMs) to interpret your request and connect it to the musical qualities of tracks, such as tempo, energy, instrumentation, and era. It’s not just searching; it’s translating your mood into sound.
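One common way to “translate a mood into sound” is to place both the prompt and every track in the same vector space and rank tracks by how closely they point in the prompt’s direction. The minimal sketch below assumes that an embedding model has already produced those vectors; the four mood dimensions, the track names, and the numbers are all made up for illustration and are not any app’s actual data or API.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def rank_tracks(prompt_vec: list[float], track_vecs: dict[str, list[float]], k: int = 3) -> list[str]:
    """Return the k track titles whose vectors are most similar to the prompt's vector."""
    scored = sorted(track_vecs.items(), key=lambda kv: cosine(prompt_vec, kv[1]), reverse=True)
    return [title for title, _ in scored[:k]]

# Hypothetical 4-dimensional vectors: [energy, tension, retro feel, warmth].
# A real system would get these from a learned embedding model, not by hand.
prompt_vec = [0.6, 0.8, 0.9, 0.5]  # "70s heist movie at sunset, but modern"
tracks = {
    "Funk-noir instrumental": [0.6, 0.7, 0.9, 0.6],
    "Lo-fi study beat": [0.2, 0.1, 0.3, 0.9],
    "Modern synth thriller cue": [0.7, 0.9, 0.6, 0.3],
    "Acoustic lullaby": [0.1, 0.0, 0.2, 1.0],
}

print(rank_tracks(prompt_vec, tracks, k=2))
# → ['Funk-noir instrumental', 'Modern synth thriller cue']
```

Cosine similarity compares direction rather than magnitude, so a quiet track and a loud one with the same overall “shape” of mood can still match.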

2. Breaking Out of the “Echo Chamber”

One of the biggest complaints about old algorithms was the “filter bubble.” If you listened to one Taylor Swift song, the AI decided you were a “Swiftie” forever and stopped showing you anything else.

The new AI models are smarter. They deliberately build in a bit of “chaos.” They’ve learned that people get bored, so they’ve started prioritizing serendipity. They look for “bridge tracks”: songs that share the same emotional DNA as your favorites but belong to a completely different genre. That’s why you might suddenly find a Japanese jazz fusion track sitting right next to your favorite indie-rock anthem.

3. Your Music is Literally Reading the Room

In 2026, your playlist isn’t a static list of songs; it’s a living thing. Thanks to better integration with our wearables and phones, the music adapts to your body and your surroundings in real time:

- The Pace Maker: If your smartwatch sees your heart rate spiking during a run, the AI won’t just play a “fast” song; it’ll find a track whose tempo matches your cadence, the number of steps you take per minute.
- The Weather Man: It sounds like science fiction, but if a thunderstorm rolls in, your “Discovery” queue might subtly shift toward more atmospheric, “moody” textures without you even asking.
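Both behaviors above can be sketched as a single scoring rule, under assumptions that are ours rather than any app’s: the watch reports cadence in steps per minute, each track has a known tempo in BPM and a made-up 0 to 1 “atmosphere” score, and a storm simply adds a bonus for atmospheric tracks. Half-time and double-time tempos count as a match, which is a common trick for running music. The names `pick_track` and `weather_weight` are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Track:
    title: str
    bpm: float         # tempo in beats per minute
    atmosphere: float  # hypothetical 0-1 score for "moody", atmospheric texture

def tempo_gap(bpm: float, cadence_spm: float) -> float:
    """Distance between a track's tempo and the runner's cadence (steps per minute).
    Half- and double-time count, so an 85 BPM track can pace a 170 spm runner."""
    return min(abs(t - cadence_spm) for t in (bpm, bpm * 2, bpm / 2))

def pick_track(tracks: list[Track], cadence_spm: float,
               storm: bool = False, weather_weight: float = 20.0) -> Track:
    """Lowest score wins: tempo mismatch, minus an atmosphere bonus when a storm is reported."""
    def score(track: Track) -> float:
        s = tempo_gap(track.bpm, cadence_spm)
        if storm:
            s -= weather_weight * track.atmosphere
        return s
    return min(tracks, key=score)

tracks = [
    Track("Bright pop single", 172, 0.1),
    Track("Half-time trap beat", 85, 0.4),
    Track("Slow ballad", 120, 0.6),
    Track("Brooding synthwave", 168, 0.9),
]

print(pick_track(tracks, cadence_spm=172).title)              # → Bright pop single
print(pick_track(tracks, cadence_spm=172, storm=True).title)  # → Brooding synthwave
```

In clear weather the exact tempo match wins; once the storm bonus kicks in, the slightly slower but far moodier synthwave track overtakes it. How strongly the weather should override pacing is a tuning choice, which `weather_weight` stands in for here.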

4. What Does This Mean for the Artists?

If you’re a creator, the “SEO” of music has changed. It’s no longer enough to just be “Rock” or “Hip-Hop.” The AI is looking for contextual metadata.

The algorithm is “listening” to the textures of your songs and reading what people say about you on social media. To get playlisted now, an artist needs to fit a scenario. Being the “perfect song for a late-night rainy drive” can now matter more than being a “Top 40” hit.
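To make “fitting a scenario” concrete, here is a hypothetical illustration rather than how any platform actually scores songs: suppose each track carries context tags, some inferred from the audio and some mined from listener comments, and a scenario is just a set of tags. Ranking by Jaccard overlap (shared tags divided by all tags) then sorts tracks by scenario fit while ignoring genre entirely. The tags, titles, and function names are made up.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by the size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def playlist_for_scenario(scenario_tags: set[str], catalog: list[dict], k: int = 2) -> list[str]:
    """Rank tracks by tag overlap with the scenario; the 'genre' field is deliberately unused."""
    ranked = sorted(catalog, key=lambda t: jaccard(scenario_tags, t["context_tags"]), reverse=True)
    return [t["title"] for t in ranked[:k]]

# Hypothetical contextual metadata for three tracks.
catalog = [
    {"title": "Chart hip-hop single", "genre": "hip-hop", "context_tags": {"party", "workout", "upbeat"}},
    {"title": "Slow-burn rock track", "genre": "rock", "context_tags": {"night", "rain", "driving", "melancholy"}},
    {"title": "Dream-pop deep cut", "genre": "pop", "context_tags": {"night", "rain", "calm"}},
]

late_night_rainy_drive = {"night", "rain", "driving"}
print(playlist_for_scenario(late_night_rainy_drive, catalog))
# → ['Slow-burn rock track', 'Dream-pop deep cut']
```

The chart single scores zero for this scenario because none of its tags overlap, which is the article’s point: a track’s context can matter more than its popularity or genre label.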

The Bottom Line

We’re moving away from “The Algorithm” and toward “The Assistant.” Music curation is becoming a collaboration between us and the tech. It’s less about being told what to like and more about having a tool that helps us find the soundtrack to our own lives.
